Bibliography

See also the references from the ACL 2016 tutorial by Luong, Cho and Manning.

Recurrent Neural Networks

- Hochreiter & Schmidhuber, 1997. Long Short-Term Memory.

- Bengio et al, IEEE Trans on Neural Networks, 1994. Learning long-term dependencies with gradient descent is difficult.

- Mikael Bodén, 2002. A guide to recurrent neural networks and backpropagation.

- Pascanu et al, 2013. On the difficulty of training Recurrent Neural Networks.

- Graves et al, ICML 2006. Connectionist Temporal Classification: Labelling Unsegmented Sequence Data with Recurrent Neural Networks.

RNN Language models

- Mikolov et al, Interspeech 2010. Recurrent neural network based language model.

- Mikolov, PhD thesis, 2012. Statistical Language Models based on Neural Networks.

- Mikolov et al, ICASSP 2011. Extensions of recurrent neural network language model.

- Zoph, Vaswani, May, Knight, NAACL’16. Simple, Fast Noise Contrastive Estimation for Large RNN Vocabularies.

- Ji, Vishwanathan, Satish, Anderson, Dubey, ICLR’16. BlackOut: Speeding up Recurrent Neural Network Language Models with very Large Vocabularies.

- Merity et al, 2016. Pointer Sentinel Mixture Models.

- Sundermeyer, Ney, and Schlüter, 2015. From Feedforward to Recurrent LSTM Neural Networks for Language Modeling.

n-gram Neural Language models

- Bengio, Ducharme, Vincent, Jauvin, JMLR’03. A Neural Probabilistic Language Model.

- Morin & Bengio, AISTATS’05. Hierarchical Probabilistic Neural Network Language Model.

- Mnih & Hinton, NIPS’09. A Scalable Hierarchical Distributed Language Model.

- Mnih & Teh, ICML’12. A fast and simple algorithm for training neural probabilistic language models.

- Vaswani, Zhao, Fossum, Chiang, EMNLP’13. Decoding with Large-Scale Neural Language Models Improves Translation.

- Kim, Jernite, Sontag, Rush, AAAI’16. Character-Aware Neural Language Models.

- Ji, Haffari, Eisenstein, NAACL’16. A Latent Variable Recurrent Neural Network for Discourse-Driven Language Models.

- Wang, Cho, ACL’16. Larger-Context Language Modelling with Recurrent Neural Network.

Neural Machine Translation

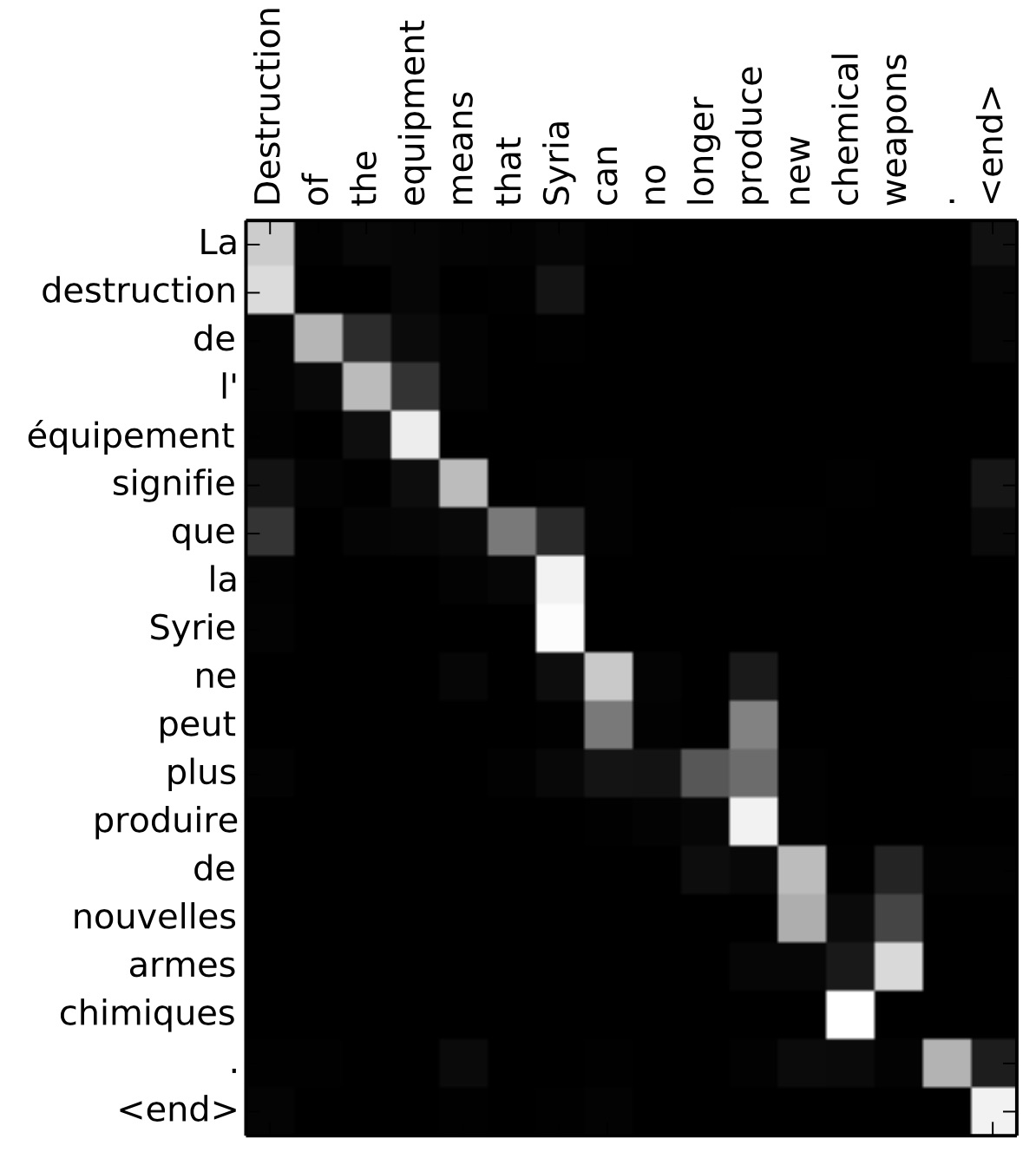

- Bahdanau et al., ICLR’15. Neural Machine Translation by Jointly Learning to Align and Translate.

- Chung, Cho, Bengio, ACL’16. A Character-Level Decoder without Explicit Segmentation for Neural Machine Translation.

- Cohn, Hoang, Vymolova, Yao, Dyer, Haffari, NAACL’16. Incorporating Structural Alignment Biases into an Attentional Neural Translation Model.

- Gu, Lu, Li, Li, ACL’16. Incorporating Copying Mechanism in Sequence-to-Sequence Learning.

- Gulcehre, Ahn, Nallapati, Zhou, Bengio, ACL’16. Pointing the Unknown Words.

- Ling, Luís, Marujo, Astudillo, Amir, Dyer, Black, Trancoso, EMNLP’15. Finding Function in Form: Compositional Character Models for Open Vocabulary Word Representation.

- Luong et al., ACL’15a. Addressing the Rare Word Problem in Neural Machine Translation.

- Luong et al., ACL’15b. Effective Approaches to Attention-based Neural Machine Translation.

- Luong & Manning, IWSLT’15. Stanford Neural Machine Translation Systems for Spoken Language Domain.

- Sennrich, Haddow, Birch, ACL’16a. Improving Neural Machine Translation Models with Monolingual Data.

- Sennrich, Haddow, Birch, ACL’16b. Neural Machine Translation of Rare Words with Subword Units.

- Tu, Lu, Liu, Liu, Li, ACL’16. Modeling Coverage for Neural Machine Translation.

Encoder-Decoder Neural Networks

- Cho et al., EMNLP 2014. Learning Phrase Representations using RNN Encoder-Decoder for Statistical Machine Translation.

- Mnih et al., NIPS’14. Recurrent Models of Visual Attention.

- Sutskever et al., NIPS’14. Sequence to Sequence Learning with Neural Networks.

- Xu, Ba, Kiros, Cho, Courville, Salakhutdinov, Zemel, Bengio, ICML’15. Show, Attend and Tell: Neural Image Caption Generation with Visual Attention.

- Jia, Liang, ACL’16. Data Recombination for Neural Semantic Parsing.

Encoder-Decoder Plus Reinforce

- Zaremba and Sutskever, ICLR 2016. Reinforcement Learning Neural Turing Machines - Revised.

- Ranzato et al., ICLR 2016. Sequence Level Training with Recurrent Neural Networks.

- Bahdanau et al., arXiv 2017. An Actor-Critic Algorithm for Sequence Prediction.

Multi-lingual Neural MT

- Zoph, Knight, NAACL’16. Multi-Source Neural Translation.

- Dong, Wu, He, Yu, Wang, ACL’15. Multi-Task Learning for Multiple Language Translation.

- Firat, Cho, Bengio, NAACL’16. Multi-Way, Multilingual Neural Machine Translation with a Shared Attention Mechanism.

Neural Word Alignment

- Yang et al, ACL 2013. Word Alignment Modeling with Context Dependent Deep Neural Network.

- Tamura, Watanabe, Sumita, ACL 2014. Recurrent Neural Networks for Word Alignment Model.

n-gram NMT

- Le et al, NAACL 2012. Continuous Space Translation Models with Neural Networks.

Misc

- Yang et al, EMNLP 2016. Toward Socially-Infused Information Extraction: Embedding Authors, Mentions, and Entities.

- Qu et al, 2016. Named Entity Recognition for Novel Types by Transfer Learning.

- McDonald et al, EMNLP 2005. Flexible Text Segmentation with Structured Multilabel Classification.